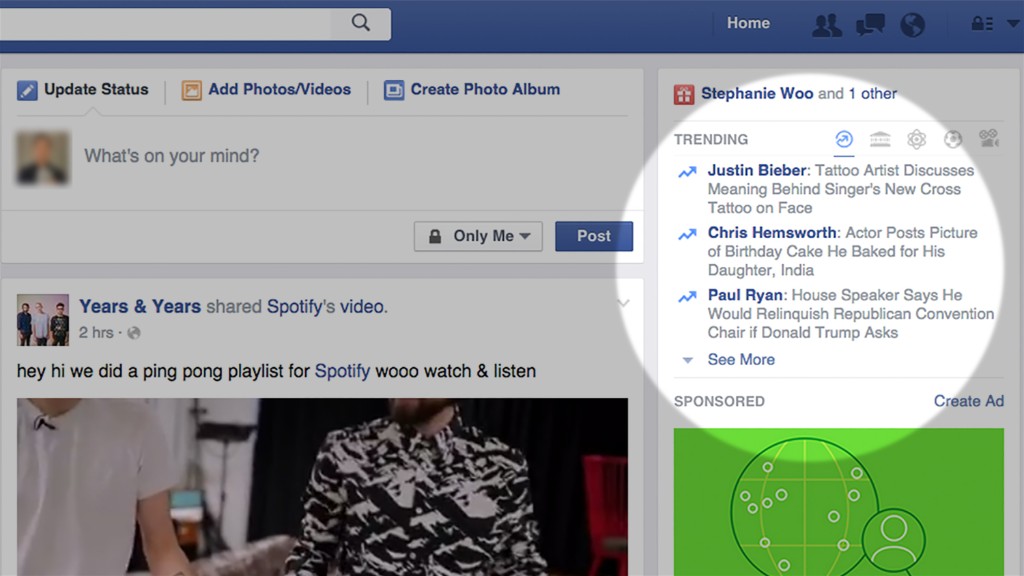

Algorithm

a process or set of rules to be followed in calculations or other problem-solving operations, especially by a computer. - Definition from Oxford Languages

Face Filter

a mask-like augmented reality that adds virtual objects to an individual's face - Definition from onezero.medium.com

Implicit Bias

an unconscious belief about a group of people - Definition from verywellmind.com